Give a dog a bone?

From the Herald (via Mark Hanna)

Warnings about feeding bones to pets are overblown – and outweighed by the beneficial effect on pets’ teeth, according to pet food experts Jimbo’s.

and

To back up their belief in the benefits of bones, Jimbo’s organised a three-month trial in 2015, studying the gums and teeth of eight dogs of various sizes.

Now, I’m not a vet. I don’t know what the existing evidence is on the benefits or harms of bones and raw food in pets’ diets. The story indicates that it’s controversial. So does Wikipedia, but I can’t tell whether this is ‘controversial’ as in the Phantom Time Hypothesis or ‘controversial’ as in risks of WiFi or ‘controversial’ as in the optimal balance of fats in the human diet. Since I don’t have a pet, this doesn’t worry me. On the other hand, I do care what the newspapers regard as reliable evidence, and Jimbo’s ‘Bone A Day’ Dental Trial is a good case to look at.

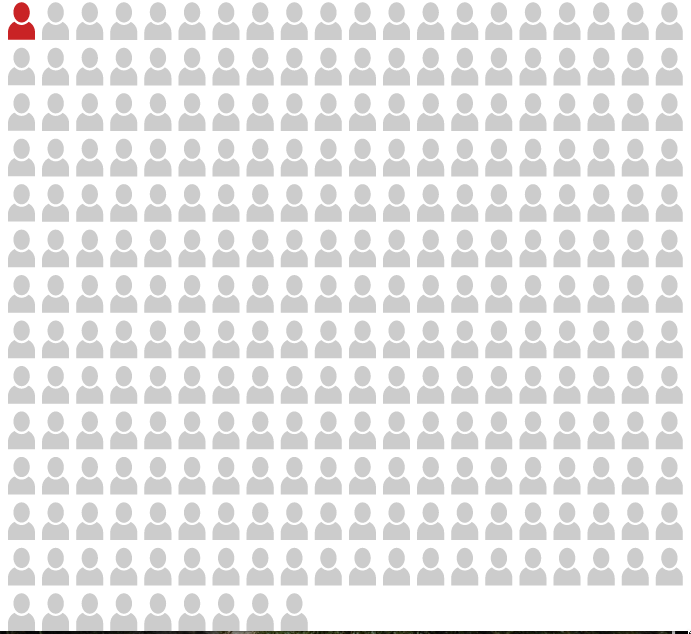

There are two questions at issue in the story: is feeding bones to dogs safe, and does it prevent gum disease and tooth damage? The small size of the trial limits what it can say about both questions, but especially about safety. Imagine that a diet including bones resulted in serious injuries for one dog in twenty, once a year on average. That’s vastly more dangerous than anyone is actually claiming, but 90% of studies this small would still miss the risk entirely. A study of eight dogs for three months will provide almost no information about safety.
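To see where the 90% figure comes from, here is a minimal sketch of the arithmetic. It assumes injuries arrive as a Poisson process at a constant rate per dog-year, which is my simplification rather than anything in the Jimbo’s report; the function name `prob_no_events` is just for illustration.

```python
import math

def prob_no_events(rate_per_dog_year, n_dogs, years):
    """Chance that a study observes zero events.

    Assumes events follow a Poisson process with a constant rate
    per dog-year (an illustrative assumption, not from the study).
    """
    expected_events = rate_per_dog_year * n_dogs * years
    return math.exp(-expected_events)

# Hypothetical risk: one serious injury per twenty dogs per year,
# in a study of eight dogs followed for three months (0.25 years).
p_miss = prob_no_events(1 / 20, n_dogs=8, years=0.25)
print(f"Chance of seeing no injuries at all: {p_miss:.3f}")
```

Eight dogs for three months is only two dog-years of follow-up, so the expected number of injuries is 0.1 and the chance of seeing none is about 0.9.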

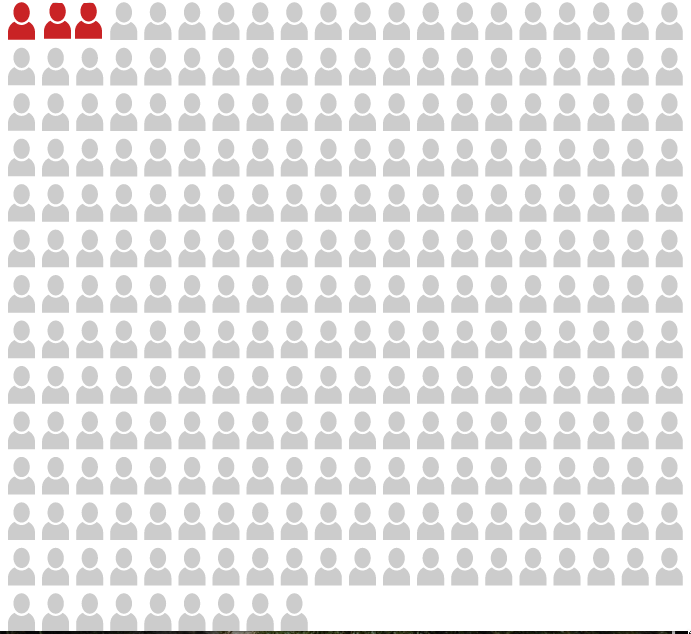

For the second question, the small study size was aggravated by gum disease not being common enough. Of the eight dogs they recruited, two scored ‘Grade 2’ on the dental grading, meaning “some gum inflammation, no gum recession”, and none scored worse than that. Of the two dogs with ‘some gum inflammation’, one improved. For the other six dogs, the study was effectively reduced to looking at tartar, and while that’s presumably related to gum and tooth disease, and can lead to it, it’s not the same thing. You might well be willing to take some risk to prevent serious gum disease; you’d be less willing to take any risk to prevent tartar. Of the four dogs with ‘Grade 1: mild tartar’, two improved. A total of three dogs improving out of eight isn’t much to go on (unless you know that improvement is naturally very unusual, which they didn’t claim).
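One rough way to put a number on ‘isn’t much to go on’ is an exact (Clopper-Pearson) 95% confidence interval for the proportion of dogs improving. This is my own illustration, not something in the study report: it treats the eight dogs as an independent sample, it says nothing about what would have happened without the bones, and the helper names below are made up for the sketch.

```python
from math import comb

def binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))

def bisect_increasing(g, target, iters=60):
    """Find p in [0, 1] with g(p) = target, for g increasing in p."""
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = (lo + hi) / 2
        if g(mid) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

def clopper_pearson(k, n, alpha=0.05):
    """Exact two-sided confidence interval for a binomial proportion."""
    # Lower limit: the p at which P(X >= k) equals alpha / 2.
    lower = 0.0 if k == 0 else bisect_increasing(
        lambda p: 1 - binom_cdf(k - 1, n, p), alpha / 2)
    # Upper limit: the p at which P(X <= k) equals alpha / 2,
    # i.e. P(X > k) equals 1 - alpha / 2.
    upper = 1.0 if k == n else bisect_increasing(
        lambda p: 1 - binom_cdf(k, n, p), 1 - alpha / 2)
    return lower, upper

# Three dogs improving out of eight, as reported in the trial.
low, high = clopper_pearson(3, 8)
print(f"95% interval for the proportion improving: {low:.2f} to {high:.2f}")
```

The interval runs from roughly 0.09 to 0.76, so the data are compatible with anything from improvement being fairly rare to it happening in most dogs.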

One important study-quality issue isn’t clear: the study description says the dental grading was based on photographs, which is good. What they don’t say is when the photograph evaluation was done. If all the ‘before’ photos were graded before the study and all the ‘after’ photos were graded afterwards, there’s a lot of room for bias to creep into the evaluation. For that reason, medical studies are often careful to mix up ‘before’ and ‘after’ or ‘treated’ and ‘control’ images and measure them all at once. It’s possible that Jimbo’s did this, and that the person doing the grading didn’t know which was ‘before’ and which was ‘after’ for a given dog. If before-after wasn’t masked this way, we can’t be very confident even that three dogs improved and none got worse.

And finally, we have to worry about publication bias. Maybe I’m just cynical, but it’s hard to believe this study would have made the Herald if the results had been unfavourable.

All in all, after reading this story you should still believe whatever you believed previously about dog food. And you should be a bit disappointed in the Herald.