How many Covid cases?

From Hannah Martin at Stuff: “Only 35% of Covid cases being reported, ministry says, after earlier saying it was 75%”

The ministry’s latest Trends and Insights report, released on Monday, said “approximately three quarters of infections are being reported as cases”, based on wastewater testing.

However, it has since said that “based on updated wastewater methodology”, about 35% of infections were reported as cases as of the week to November 2.

This is a straightforward loss during communication: the 75% was an estimate of how much the reporting had changed since the first Omicron peak, but it got into the Trends and Insights report as an absolute rate. Dion O’Neale is quoted further down the story explaining this.

For future reference, it’s worth looking at what we can and can’t estimate well from various sources of information we might have.

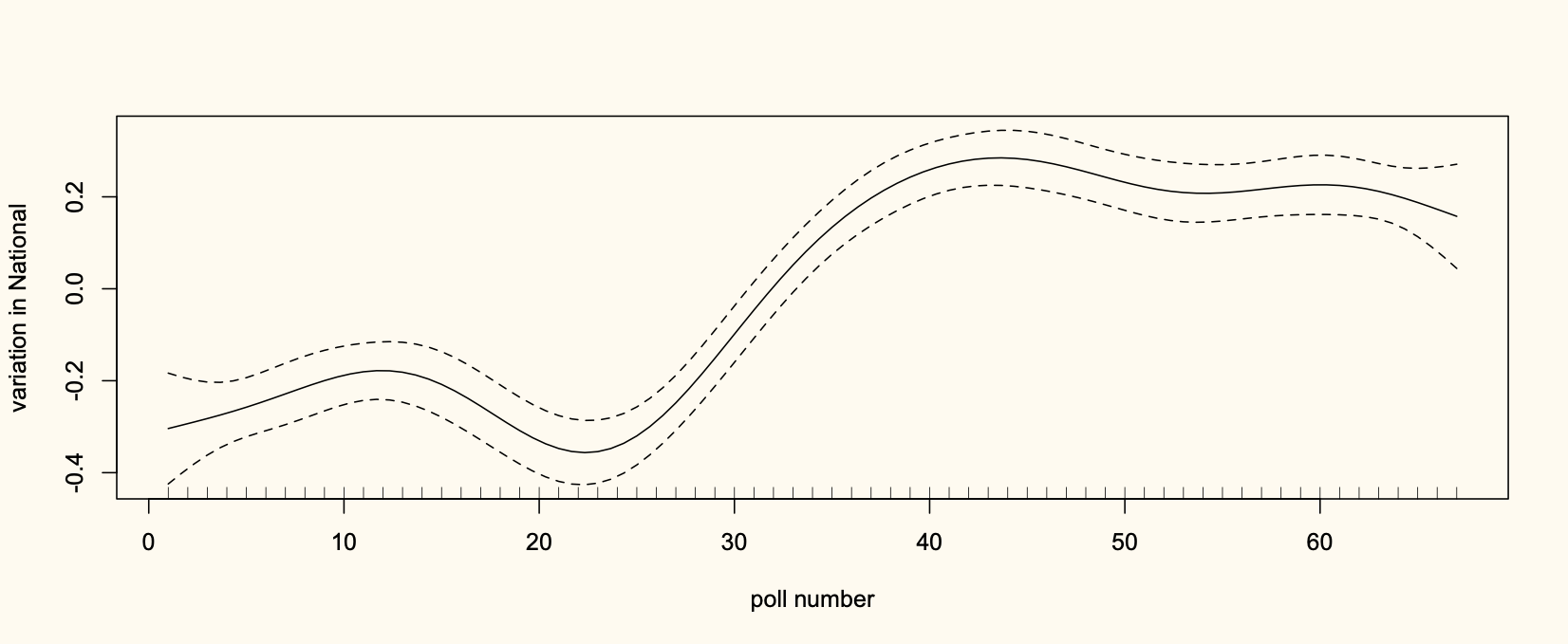

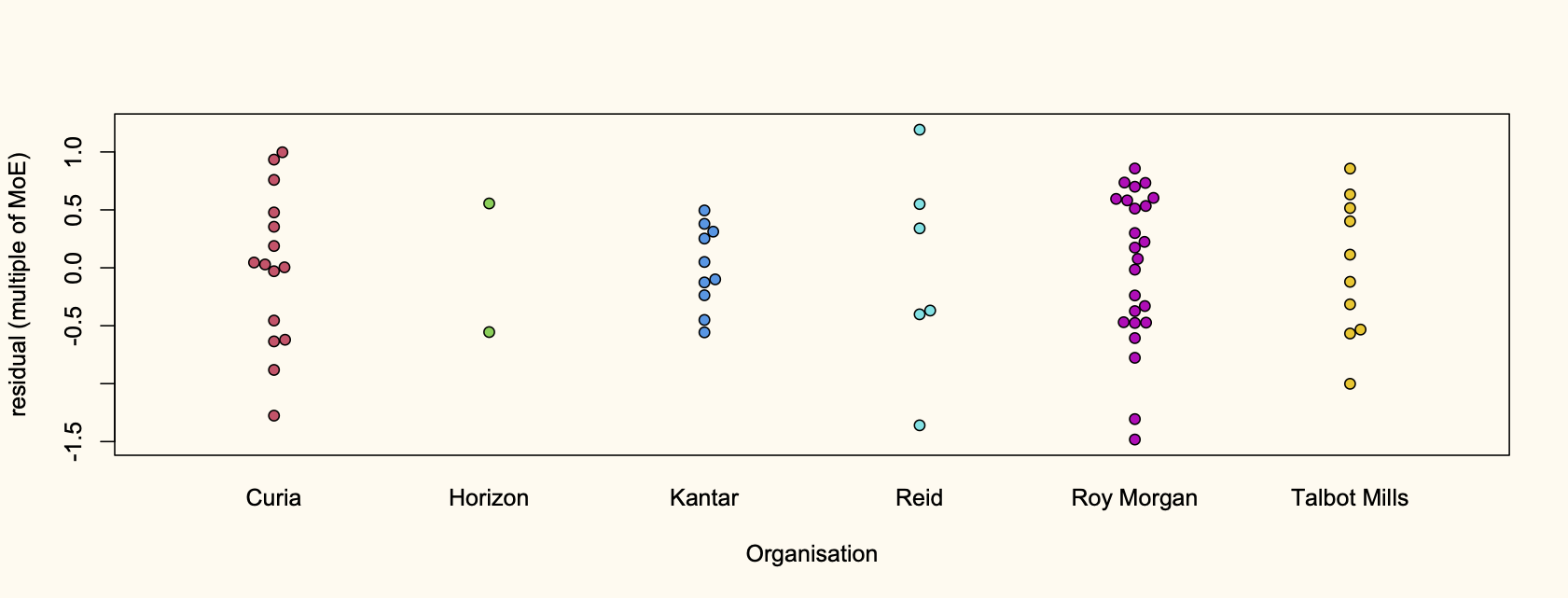

The wastewater data has the advantage of including everyone in a set of cities and towns, adding up to the majority of the country; everybody poops. It has the disadvantage of not directly measuring cases or infections. The wastewater data tells us how many Covid viral fragments are in the wastewater. How that relates to infections depends on how many viral fragments each person sheds and how many of these make it intact to the collection point. This isn’t known — it’s probably different for different people, and might depend on vaccination and previous infections and which variant you have and age and who knows what else. However, the population average probably changes slowly over time, so if the number of viral fragments is going up this week, the number of active infections is probably going up, and if the number of viral fragments is going down, the number of active infections is probably going down.

Using the wastewater data, we can see that the ratio of reported cases to wastewater viral fragments has been going down slowly since the first Omicron peak. We’ve got a lot of other reasons to think testing and reporting are going down, so that’s a good explanation. It’s especially a good explanation because most of the other reasons for a change (e.g., less viral shedding in second infections) would make the ratio go up instead. So, with the ratio of reported cases to viral fragments down by 25% since that peak, it makes sense to estimate that the ratio of reported cases to infections has also gone down by 25%.
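As a rough sketch of that comparison: divide reported cases by the wastewater signal each week, then scale so the peak week equals 1. The function name and all the numbers below are made up for illustration; they are not ESR or ministry data.

```python
def relative_reporting_index(cases, wastewater, baseline=0):
    """Cases-per-unit-of-wastewater-signal, scaled so the baseline week is 1.0.

    Only the relative change is meaningful: the wastewater units are arbitrary.
    """
    if len(cases) != len(wastewater):
        raise ValueError("cases and wastewater must cover the same weeks")
    ratios = [c / w for c, w in zip(cases, wastewater)]
    return [r / ratios[baseline] for r in ratios]


# Hypothetical weekly values (illustrative only, not real data).
cases = [20000, 9000, 6000]       # reported cases
wastewater = [10.0, 5.0, 4.0]     # viral signal, arbitrary units

index = relative_reporting_index(cases, wastewater)
for week, value in enumerate(index):
    print(f"week {week}: reporting relative to peak = {value:.2f}")
```

In this toy example the last week comes out at 0.75 of the peak ratio, which is the kind of number that says "reporting is down 25% since the peak" and nothing about the absolute rate.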

Now all we need is to know what the reporting rate was at the peak. Which we don’t know. It couldn’t have been much higher than 60%, because some infections won’t have been symptomatic and some tests will have been false negatives. If it was 60%, a 25% drop puts it at about 45% now. If it was lower than that at the peak, it’s lower than 45% now. You could likely get somewhat better guesses by combining the epidemic models and the wastewater data, but it’s always going to be difficult.
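The arithmetic is just a multiplication, but it shows how much the answer depends on the unknown starting point. This is a minimal sketch; the function name is hypothetical and the candidate peak rates are guesses for illustration, not estimates from any source.

```python
def current_reporting_rate(peak_rate, relative_decline):
    """Reporting rate now, given the rate at the peak and the
    fractional drop in the cases-to-wastewater ratio since then."""
    if not 0.0 <= peak_rate <= 1.0:
        raise ValueError("peak_rate must be between 0 and 1")
    if not 0.0 <= relative_decline <= 1.0:
        raise ValueError("relative_decline must be between 0 and 1")
    return peak_rate * (1.0 - relative_decline)


# Assumed peak reporting rates; 0.60 is the rough upper limit argued above.
for peak in (0.60, 0.50, 0.40):
    now = current_reporting_rate(peak, relative_decline=0.25)
    print(f"peak {peak:.0%} -> now {now:.0%}")
```

Every plausible peak rate gives a different current rate, which is why the relative change is much better pinned down than the absolute number.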

You might think that hospitalisation and death data are less subject to under-reporting. This is true, but the proportion of infections leading to hospitalisation is (happily) going down due to vaccination and prior infection, and the proportion leading to death is (happily) going down even more due to better treatment. On top of those changes, hospitalisation and death lag infection by quite a long time. The hospitalisation rate and death rate are directly important for policy, but they aren’t good indicators of current infections.

So, we’re a bit stuck. We can detect increases or decreases in infections fairly reliably with wastewater data, but absolute numbers are hard. This is even more true for other diseases — in the future, there will hopefully be wastewater monitoring for influenza and maybe RSV, where we expect the case reporting rate to be massively lower than it is for Covid.

To get good absolute numbers we need a measurement of the actual infection rate in a random sample of people. That’s planned — originally for July 2022, but the timetable keeps slipping. A prevalence survey is a valuable complement to the wastewater data; it gives absolute numbers that can be used to calibrate the more precise and geographically detailed relative numbers from the wastewater. Until we have a prevalence survey, the ESR dashboard is a good way to get a feeling for whether Covid infections are going up or down, and how fast.
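To make the calibration idea concrete, here is a hedged sketch: one survey week gives an absolute number of infections, which fixes a scale factor for the wastewater signal, and that factor is then applied to other weeks. It assumes average shedding per infection is the same in those weeks as in the survey week, which is only approximately true. The function names and every number are hypothetical.

```python
def calibration_factor(survey_infections, wastewater_at_survey):
    """Infections per unit of wastewater signal, from one survey week."""
    if wastewater_at_survey <= 0:
        raise ValueError("wastewater signal must be positive")
    return survey_infections / wastewater_at_survey


def estimate_infections(wastewater, factor):
    """Scale relative wastewater signals into estimated infection counts."""
    return [w * factor for w in wastewater]


# Hypothetical inputs (illustrative only, not real data).
factor = calibration_factor(survey_infections=50_000, wastewater_at_survey=5.0)
weekly_signal = [4.0, 5.0, 8.0]   # arbitrary units; the middle week is the survey week

for week, est in enumerate(estimate_infections(weekly_signal, factor)):
    print(f"week {week}: about {est:,.0f} infections")
```

The further a week is from the survey, the less the constant-shedding assumption can be trusted, so in practice the calibration would need refreshing with repeated surveys.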
