The Herald has a good report of research to be published in Science tomorrow, studying earthquakes on the Alpine fault. By looking at a river site where quakes disrupted water flow, interrupting peat deposition with a layer of sediment, the researchers could get a history of large quakes going back 8000 years. They don’t know exactly how big any of the quakes were, but they were big enough to rupture the surface and affect water flow, so at the least they would mess up roads and bridges, and disrupt tourism.

Based on this 8000-year history, it seems that the Alpine fault is relatively regular in how often it has earthquakes: more so than the San Andreas Fault in California, for example. Since the fault has major earthquakes about every 330 years, and the most recent one was 295 years ago, it’s likely to go off soon. Of course, ‘soon’ here doesn’t mean “before the Super 15 final”; the time scales are a bit longer than that.

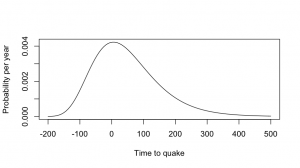

We can look at some graphs to get a rough idea of the risk over different time scales. I'm going to approximate the distribution of the times between earthquakes by a log-normal distribution; that is, the logarithm of the times has a Normal distribution.
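
A log-normal is usually summarised by the mean and spread of the intervals. Its parameters, though, are the mean μ and standard deviation σ of the log-interval. Here is a minimal Python sketch of the conversion, using only the standard library. The 330-year mean is the figure quoted above. The 110-year standard deviation is a hypothetical value chosen for illustration, not the paper's estimate, and the function names are made up for this example.

```python
import math
from statistics import NormalDist


def lognormal_params(mean, sd):
    """Return (mu, sigma) of log(T) for a log-normal T with the given mean and SD."""
    sigma2 = math.log(1 + (sd / mean) ** 2)
    mu = math.log(mean) - sigma2 / 2
    return mu, math.sqrt(sigma2)


def interval_cdf(t, mu, sigma):
    """P(T <= t): the log of the interval T is Normal(mu, sigma)."""
    if t <= 0:
        return 0.0
    return NormalDist(mu, sigma).cdf(math.log(t))


MEAN_INTERVAL = 330   # years between big quakes, from the post
SD_INTERVAL = 110     # years, hypothetical assumption (not the paper's value)

mu, sigma = lognormal_params(MEAN_INTERVAL, SD_INTERVAL)
print(f"mu = {mu:.3f}, sigma = {sigma:.3f}")
print(f"P(interval <= 330 years) = {interval_cdf(330, mu, sigma):.2f}")
```

The log-normal is skewed to the right, so its median is below its mean. As a result, the last line prints a probability a little above one half.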

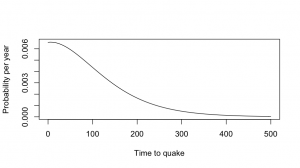

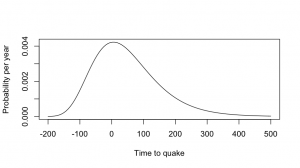

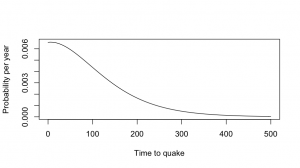

This is a simple and reasonable model for time intervals, and it also has the virtue of giving the same answers that the researchers gave to the press. Using the estimates of mean and variation in the paper, the distribution of times to the next big quake looks like the first graph. The quake is relatively predictable, but “relatively” in this sense means “give or take a century”.
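
To put a number on "give or take a century", here is a sketch that computes quantiles of the interval minus the 295 years already elapsed. It does not yet use the fact that no quake has happened. The 330-year mean and 295 elapsed years come from the post. The 110-year standard deviation is a hypothetical assumption, not the paper's estimate.

```python
import math
from statistics import NormalDist

MEAN_INTERVAL = 330   # years between big quakes, from the post
SD_INTERVAL = 110     # years, hypothetical spread (not from the paper)
ELAPSED = 295         # years since the last big quake, from the post

# Convert mean/SD of the interval to parameters of log(interval).
sigma = math.sqrt(math.log(1 + (SD_INTERVAL / MEAN_INTERVAL) ** 2))
mu = math.log(MEAN_INTERVAL) - sigma ** 2 / 2
log_interval = NormalDist(mu, sigma)


def time_to_next_quantile(p):
    """p-quantile of (interval - elapsed), ignoring that no quake has happened yet."""
    return math.exp(log_interval.inv_cdf(p)) - ELAPSED


quantiles = {p: time_to_next_quantile(p) for p in (0.1, 0.5, 0.9)}
for p, years in quantiles.items():
    print(f"{p:.0%} quantile: {years:+.0f} years from now")
```

With this assumed spread, the 10% and 90% quantiles are a couple of centuries apart. The 10% quantile is negative, which would mean a quake that has already happened.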

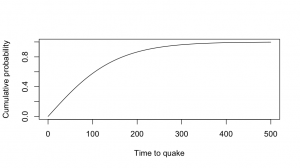

Now, by definition, the next big quake hasn’t happened yet, so we can throw away the part of this distribution that’s less than zero, and rescale the distribution so it still adds up to 1, getting the second graph. The chance of a big quake is a bit less than 1% per year — not very high, but certainly worth doing something about. For comparison, it’s about 2-3 times the risk per year of being diagnosed with breast cancer for middle-aged women.
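
Throwing away the negative part and rescaling is the same as conditioning on the interval T being longer than the 295 years already elapsed: P(T <= 295 + t | T > 295) = [F(295 + t) - F(295)] / [1 - F(295)], where F is the log-normal CDF. Below is a minimal sketch of the one-year version. It assumes a hypothetical 110-year standard deviation, which is not the paper's value; the 330-year mean and 295 elapsed years are from the post.

```python
import math
from statistics import NormalDist

MEAN_INTERVAL = 330   # years, from the post
SD_INTERVAL = 110     # years, hypothetical assumption (not the paper's value)
ELAPSED = 295         # years since the last big quake, from the post

sigma = math.sqrt(math.log(1 + (SD_INTERVAL / MEAN_INTERVAL) ** 2))
mu = math.log(MEAN_INTERVAL) - sigma ** 2 / 2
log_interval = NormalDist(mu, sigma)


def interval_cdf(t):
    """P(interval <= t) under the log-normal model."""
    return log_interval.cdf(math.log(t)) if t > 0 else 0.0


def prob_quake_within(years):
    """P(quake in the next `years` | no quake in the ELAPSED years so far).

    Dropping the impossible part (interval <= ELAPSED) and rescaling the
    rest to total 1 is exactly conditioning on survival to ELAPSED.
    """
    survived = 1 - interval_cdf(ELAPSED)
    return (interval_cdf(ELAPSED + years) - interval_cdf(ELAPSED)) / survived


annual = prob_quake_within(1)
print(f"Chance of a big quake in the next year: {annual:.2%}")
```

Under this assumed spread, the one-year figure comes out a little under 1%. It depends on the spread, so treat the exact value as illustrative.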

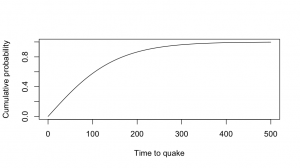

The Herald article (and the press release) quote a 30% chance over 50 years, which matches this lognormal model. At 80 years there’s a roughly 50:50 chance, and if we wait long enough the quake has to happen eventually.
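
The longer-horizon figures come from conditioning on no quake in the last 295 years: P(quake within t years) = [F(295 + t) - F(295)] / [1 - F(295)], with F the log-normal CDF of the interval. This sketch evaluates that at a few horizons. It then uses bisection to find the wait at which the chance reaches 50:50. The 330-year mean and 295 elapsed years are from the post. The 110-year standard deviation is a hypothetical assumption, not the paper's estimate.

```python
import math
from statistics import NormalDist

MEAN_INTERVAL = 330   # years, from the post
SD_INTERVAL = 110     # years, hypothetical assumption (not the paper's estimate)
ELAPSED = 295         # years since the last big quake, from the post

sigma = math.sqrt(math.log(1 + (SD_INTERVAL / MEAN_INTERVAL) ** 2))
mu = math.log(MEAN_INTERVAL) - sigma ** 2 / 2
log_interval = NormalDist(mu, sigma)


def interval_cdf(t):
    """P(interval <= t) under the log-normal model."""
    return log_interval.cdf(math.log(t)) if t > 0 else 0.0


def prob_quake_within(years):
    """P(quake within `years` | no quake in the first ELAPSED years)."""
    survived = 1 - interval_cdf(ELAPSED)
    return (interval_cdf(ELAPSED + years) - interval_cdf(ELAPSED)) / survived


def years_until_chance(target, lo=0.0, hi=5000.0, iterations=60):
    """Bisection for the horizon at which prob_quake_within reaches `target`.

    Works because prob_quake_within increases with the horizon.
    """
    for _ in range(iterations):
        mid = (lo + hi) / 2
        if prob_quake_within(mid) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


for horizon in (10, 50, 80, 200):
    print(f"{horizon:>4} years: {prob_quake_within(horizon):.0%}")
t_half = years_until_chance(0.5)
print(f"50:50 point: about {t_half:.0f} years from now")
```

With the assumed spread, the 50-year chance comes out at around a third and the 50:50 point lands near 80 years. Both are close to the quoted figures. A different spread would shift both.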

The risk of a major quake in any given year isn’t all that high, but the problem isn’t going away and the quake is going to make a serious mess when it happens.

[Update: Stuff also has an article. They quote me (via the Science Media Centre), but I'm just describing one of the graphs in the paper: Figure 3B, if you want to go and check that I can do simple arithmetic.]