Stuff has a new election ‘poll of polls’.

The Stuff poll of polls is an average of the most recent of each of the public political polls in New Zealand. Currently, there are only three: Roy Morgan, Colmar Brunton and Reid Research.

When these companies release a new poll it replaces their previous one in the average.

The Stuff poll of polls differs from others by giving weight to each poll based on how recent it is.

All polls less than 36 days old get equal weight. Any poll 36-70 days old carries a weight of 0.67, 70-105 days old a weight of 0.33 and polls greater than 105 days old carry no weight in the average.
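To make the scheme concrete, here is a minimal Python sketch of a recency-weighted average along the lines Stuff describes. It is not Stuff's code: the function names, the data layout, and the handling of the boundary ages (the description puts 70 days in two bands) are my assumptions, and the poll numbers in the example are made up.

```python
from datetime import date

def recency_weight(age_days):
    """Weight for a poll of the given age, following the bands quoted above.

    Assumption: polls exactly 70 or 105 days old fall in the younger band,
    since the published description is ambiguous at those boundaries.
    """
    if age_days < 36:
        return 1.0
    if age_days <= 70:
        return 0.67
    if age_days <= 105:
        return 0.33
    return 0.0

def poll_of_polls(latest_polls, today):
    """Weighted average of one party's support.

    latest_polls: list of (pollster, poll_date, support_pct) tuples,
    containing only the most recent poll from each pollster.
    Returns None if every poll is too old to carry any weight.
    """
    weighted = [(recency_weight((today - poll_date).days), pct)
                for _, poll_date, pct in latest_polls]
    total_weight = sum(w for w, _ in weighted)
    if total_weight == 0:
        return None
    return sum(w * pct for w, pct in weighted) / total_weight

# Hypothetical figures, for illustration only.
example = [
    ("Roy Morgan", date(2017, 6, 1), 40.0),      # 10 days old: weight 1.0
    ("Colmar Brunton", date(2017, 4, 22), 46.0), # 50 days old: weight 0.67
    ("Reid Research", date(2017, 2, 11), 30.0),  # 120 days old: weight 0
]
print(round(poll_of_polls(example, today=date(2017, 6, 11)), 1))
```

With a step function like this, a poll's influence changes abruptly the day it crosses a boundary, which is one sense in which the weights are arbitrary.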

In thinking about whether this is a good idea, we’d need to first think about what the poll is trying to estimate and about the reasons it doesn’t get that target quantity exactly right.

Officially, polls are trying to estimate what would happen “if an election were held tomorrow”, and there’s no interest in prediction for dates further forward in time than that. If that were strictly true, no-one would care about polls, since the results would refer only to the past two weeks when the surveys were done.

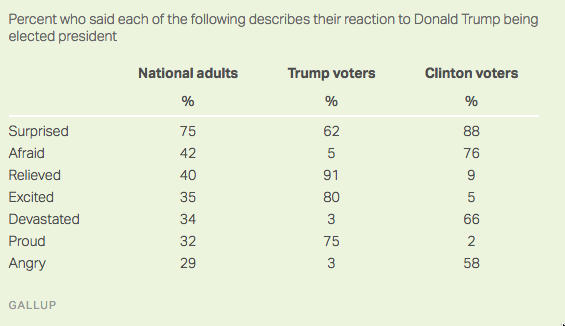

A poll taken over a two-week period is potentially relevant because there’s an underlying truth that, most of the time, changes more slowly than this. It will occasionally change faster (eg, Donald Trump’s support in the US polls seems to have increased after James Comey’s claims about Clinton’s emails, and Labour’s support in the UK polls increased after the election was called) but it will mostly change more slowly. In my view, that’s the thing people are trying to estimate, and they’re trying to estimate it because it has some medium-term predictive value.

In addition to changes in the underlying truth, there is the idealised sampling variability that pollsters quote as the ‘margin of error’. There’s also the larger real-world sampling variability that arises because actual polling doesn’t meet the idealised mathematical assumptions. And there are ‘house effects’, where polls from different companies have consistent differences in the medium to long term, and none of them perfectly match voting intentions as expressed at actual elections.
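For reference, the idealised ‘margin of error’ is usually the half-width of a 95% interval for a proportion under simple random sampling. The sketch below computes it; the function name and the sample size of 1000 are illustrative assumptions, not figures from any of the pollsters mentioned here.

```python
import math

def margin_of_error(p, n, z=1.96):
    """Idealised 95% margin of error for a proportion p from a simple
    random sample of size n. It ignores weighting, non-response and
    house effects, so real-world uncertainty will be larger."""
    return z * math.sqrt(p * (1 - p) / n)

# Hypothetical poll: 1000 respondents, a party on 50% support.
print(f"{100 * margin_of_error(0.5, 1000):.1f} percentage points")
```

The quoted margin is the maximum, at 50% support; it is smaller for minor parties, though proportionally larger relative to their support.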

Most of the time in New Zealand, when we’re not about to have an election, the only recent poll is a Roy Morgan poll, because Roy Morgan polls much more often than anyone else. That means the Stuff poll of polls will be dominated by the most recent Roy Morgan poll. This would be a good idea if you thought that changes in underlying voting intention were large compared to sampling variability and house effects. If you thought sampling variability was larger, you’d want multiple polls from a single company (perhaps downweighted by time). If you thought house effects were non-negligible, you wouldn’t want to downweight other companies’ older polls as aggressively.

Near an election, there are lots more polls, so the most recent poll from each company is likely to be recent enough to get reasonably high weight. The Stuff poll is then distinctive in that it completely drops all but the most recent poll from each company.

Recency weighting, however, isn’t at all unique to the Stuff poll of polls. For example, the pundit.co.nz poll of polls downweights older polls, but doesn’t drop the weight to zero once another poll comes out. Peter Ellis’s two summaries both downweight older polls in a more complicated and less arbitrary way; the same was true of Peter Green’s poll aggregation when he was doing it. Curia’s average downweights even more aggressively than Stuff’s, but does not otherwise discard older polls by the same company. RadioNZ averages only the four most recent available results (regardless of company); they don’t do any other weighting for recency, but that’s plenty.

However, another thing recent elections have shown us is that uncertainty estimates are important: that’s what Nate Silver and almost no-one else got right in the US. The big limitation of simple, transparent poll-of-polls aggregators is that they say nothing useful about uncertainty.