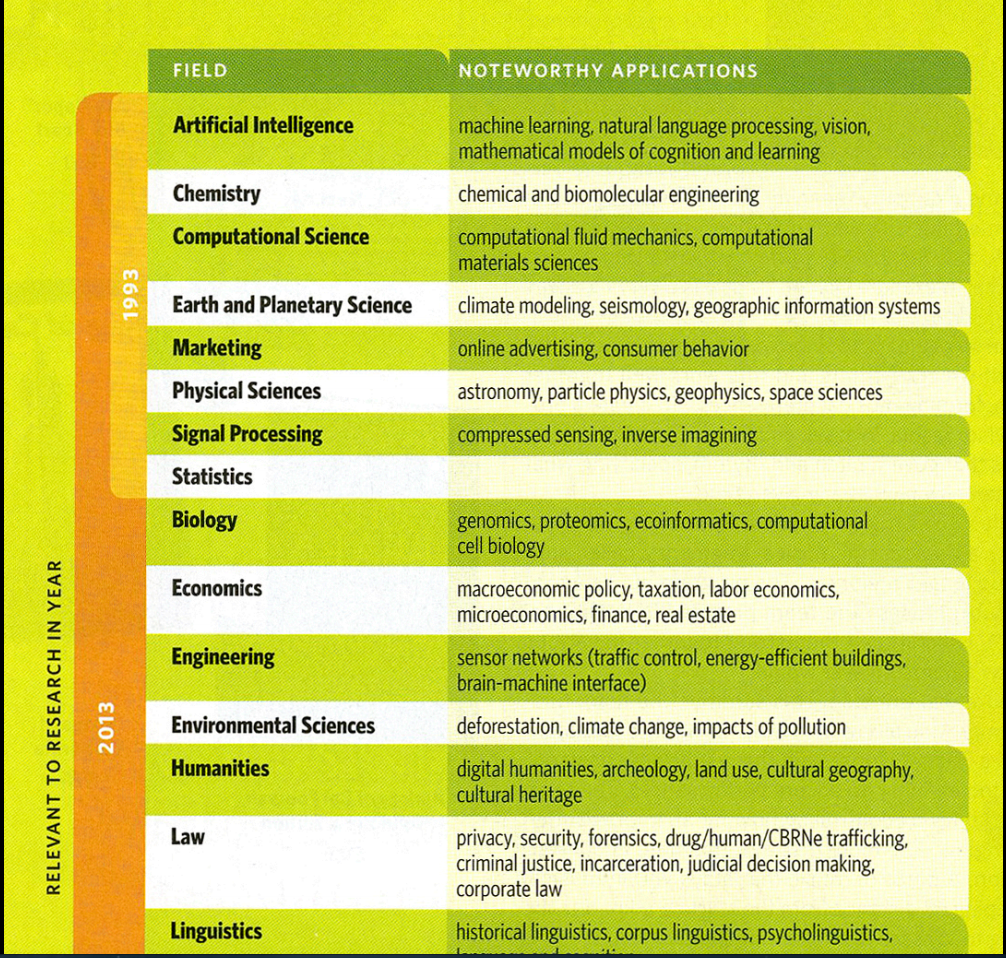

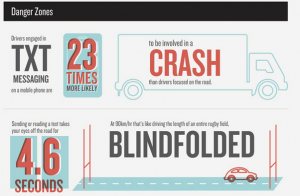

I couldn’t have put it less clearly myself, but if you follow the link, you do get to one of those tall, skinny totem-pole infographics, and the relevant chunk of it says

What it doesn’t do is tell you why they believe this. Neither does anything else on the web page, or, as far as I can tell, the whole set of pages on distracted driving.

A bit of Googling turns up this New York Times story from 2009:

> The new study, which entailed outfitting the cabs of long-haul trucks with video cameras over 18 months, found that when the drivers texted, their collision risk was 23 times greater than when not texting.

That sounds fairly convincing, though the story also mentions that a study of college students using driving simulators found only an 8-fold increase, and notes that texting might well be more dangerous when driving a truck than a car.

The New York Times doesn’t link, but with the name of the principal researcher we can find the research report, and Table 17, on page 44, does indeed include the number 23. There’s a pretty huge margin of error: the 95% confidence interval goes down to 9.7. More importantly, though, the table header says “Likelihood of a Safety-Critical Event”.
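To get a feel for how wide that interval is, here is a rough sketch in Python. It uses only the two numbers quoted above (23 and 9.7). The upper bound is not quoted here, so the code assumes the interval is symmetric on the log scale, as ratio intervals often are; `implied_upper` is therefore a back-of-envelope figure under that assumption, not a value taken from the report.

```python
import math

point_estimate = 23.0  # figure quoted from Table 17
lower_bound = 9.7      # lower end of the 95% interval quoted above

# How many times smaller the lower bound is than the point estimate.
fold_below = point_estimate / lower_bound

# ASSUMPTION (not from the report): the interval is symmetric on the
# log scale, so the upper bound sits the same log-distance above 23.
log_half_width = math.log(point_estimate) - math.log(lower_bound)
implied_upper = math.exp(math.log(point_estimate) + log_half_width)

print(f"Lower bound is {fold_below:.1f}x below the estimate")
print(f"Implied upper bound under the assumption: {implied_upper:.1f}")
```

Under that assumption the data would be consistent with anything from roughly a 10-fold increase to something over 50-fold, which is what “pretty huge” looks like for a ratio.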

A “Safety-Critical Event” could be a crash, but it could also be a near-crash, or a situation where someone else needed to alter their behaviour to avoid a crash, or an unintentional lane change. Of the 4452 “safety-critical events”, 21 were crashes. There were 31 safety-critical events observed during texting.
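The counts make the point more sharply when turned into proportions. A minimal sketch, using only the three numbers quoted above (the variable names are just for illustration):

```python
# Counts quoted from the research report.
total_events = 4452    # all "safety-critical events"
crashes = 21           # events that were actual crashes
texting_events = 31    # events observed during texting

crash_share = crashes / total_events
texting_share = texting_events / total_events

print(f"Crashes as a share of all events: {crash_share:.2%}")
print(f"Texting events as a share of all events: {texting_share:.2%}")
```

Fewer than half a percent of the events were crashes, so the estimate is driven almost entirely by near-crashes and other non-crash events, and it rests on only 31 events observed during texting.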

So, the figure of 23 is not actually for crashes, but it is at least for something relevant, measured carefully. Texting, as would be pretty obvious, isn’t a good thing to do when you’re driving. And even if you’re totally rad, hip, and cool like the police tweetwallah, it’s OK to link. Pretend you’re part of the Wikipedia generation or something.