Quake prediction: how good does it need to be?

From a detailed story in the ChCh Press (via Eric Crampton) about various earthquake-prediction approaches:

About 40 minutes before the quake began, the TEC in the ionosphere rose by about 8 per cent above expected levels. Somewhat perplexed, he looked back at the trend for other recent giant quakes, including the February 2010 magnitude 8.8 event in Chile and the December 2004 magnitude 9.1 quake in Sumatra. He found the same increase about the same time before the quakes occurred.

Heki says there has been considerable academic debate both supporting and opposing his research.

To have 40 minutes warning of a massive quake would be very useful indeed and could help save many lives. “So, why 40 minutes?” he says. “I just don’t know.”

He says if the link were to be proved more firmly in the future it could be a useful warning tool. However, there are drawbacks in that the correlation only appears to exist for the largest earthquakes, whereas big quakes of less than magnitude 8.0 are far more frequent and still cause death and devastation. Geomagnetic storms can also render the system impotent, with fluctuations in the total electron count masking any pre-quake signal.

Let’s suppose that with more research everything works out, and there is a rise in this TEC before all very large quakes. How much would this help in New Zealand? The obvious place is Wellington. A quake of over magnitude 8.0 was observed in the area in 1855, when it triggered a tsunami. A repeat would also shatter many of the earthquake-prone buildings. A 40-minute warning could save many lives. It appears that TEC shouldn’t be that expensive to measure: it’s based on observing the time delays in GPS satellite transmissions as they pass through the ionosphere, so it mostly needs a very accurate clock (in fact, NASA publishes TEC maps every five minutes). Also, it looks like it would be very hard to hack the ionosphere to force the alarm to go off. The real problem is accuracy.

The system will have false positives and false negatives. False negatives (missing a quake) aren’t too bad, since that’s where you are without the system. False positives are more of a problem. They come in two forms: when the alarm goes off completely in the absence of a quake, and when there is a quake but no tsunami or catastrophic damage.

Complete false predictions would need to be very rare. If you tell everyone to run for the hills and it turns out to be sunspots or the wrong kind of snow, they will not be happy: the cost in lost work (and theft?) would be substantial, and there would probably be injuries. Partial false predictions, where there was a large quake but it was too far away or in the wrong direction to cause a tsunami, would be just as expensive but probably wouldn’t cause as much ill-feeling or skepticism about future warnings.

Now for the disappointment. The story says “there has been considerable academic debate”. There has. For example, in a (paywalled) paper from 2013 looking at the Japanese quake that prompted Heki’s idea:

A detailed analysis of the ionospheric variability in the 3 days before the earthquake is then undertaken, where a simultaneous increase in foF2 and the Es layer peak plasma frequency, foEs, relative to the 30-day median was observed within 1 h before the earthquake. A statistical search for similar simultaneous foF2 and foEs increases in 6 years of data revealed that this feature has been observed on many other occasions without related seismic activity. Therefore, it is concluded that one cannot confidently use this type of ionospheric perturbation to predict an impending earthquake.

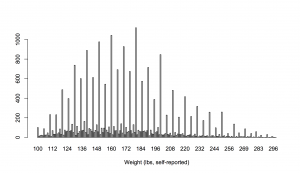

In translation: you need to look just right to see this anomaly, and there are often anomalies like this one without quakes. Over four years they saw 24 anomalies, only one shortly before a quake. Six complete false positives per year is obviously too many. Suppose future research could refine what the signal looks like and reduce the false positives by a factor of ten: that’s still evacuation alarms with no quake more than once every two years. I’m pretty sure that’s still too many.
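For anyone who wants to check the arithmetic, here is a minimal sketch in Python. The counts (24 anomalies, one before a quake, four years) and the factor-of-ten improvement are the ones used in the paragraph above; the function name and its return keys are just illustrative choices, not anything from the paper.

```python
def false_alarm_summary(anomalies, quake_linked, years, improvement=10):
    """Summarise alarm performance from raw counts.

    anomalies:    total anomalies that would have triggered an alarm
    quake_linked: how many of those came shortly before a quake
    years:        length of the observation period
    improvement:  hypothetical factor by which future research
                  cuts the false alarms (an assumption, not a result)
    """
    false_alarms = anomalies - quake_linked
    per_year = false_alarms / years
    improved_per_year = per_year / improvement
    return {
        # Alarms with no quake, per year, with the signal as it stands
        "per_year": per_year,
        # Fraction of alarms that were followed by a quake
        "fraction_real": quake_linked / anomalies,
        # False alarms per year after the hypothetical improvement
        "improved_per_year": improved_per_year,
        # Average gap between false alarms after the improvement
        "years_between": 1 / improved_per_year,
    }


summary = false_alarm_summary(anomalies=24, quake_linked=1, years=4)
for name, value in summary.items():
    print(f"{name}: {value:.3f}")
```

With these inputs there are 23 false alarms in four years, or 5.75 per year, and only about 4% of alarms are followed by a quake. A tenfold improvement leaves 0.575 false alarms per year, which is one roughly every 1.7 years.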