Against sampling?

Stuff has a story from the Sydney Morning Herald about the claim that smartphones will be obsolete in five years. They don't believe it. Neither do I, but that doesn't mean we agree on the reasons. The story argues that not enough people were surveyed:

The research lab surveyed 100,000 people across its native Sweden and 39 other countries.

With around 1.9 billion smartphone users globally, this means ConsumerLab covered just 0.0052 per cent of active users for its study.

This equates to about 2500 in each country; the population of Oberon.

If you don’t recognise Oberon, it’s a New South Wales town slightly smaller than Raglan.

Usually, the Sydney Morning Herald doesn’t have such exacting standards for sample size. For example, their recent headline “GST rise backed by voters if other taxes cut: Fairfax-Ipsos poll” was based on 1402 people, about the population of Moerewa.

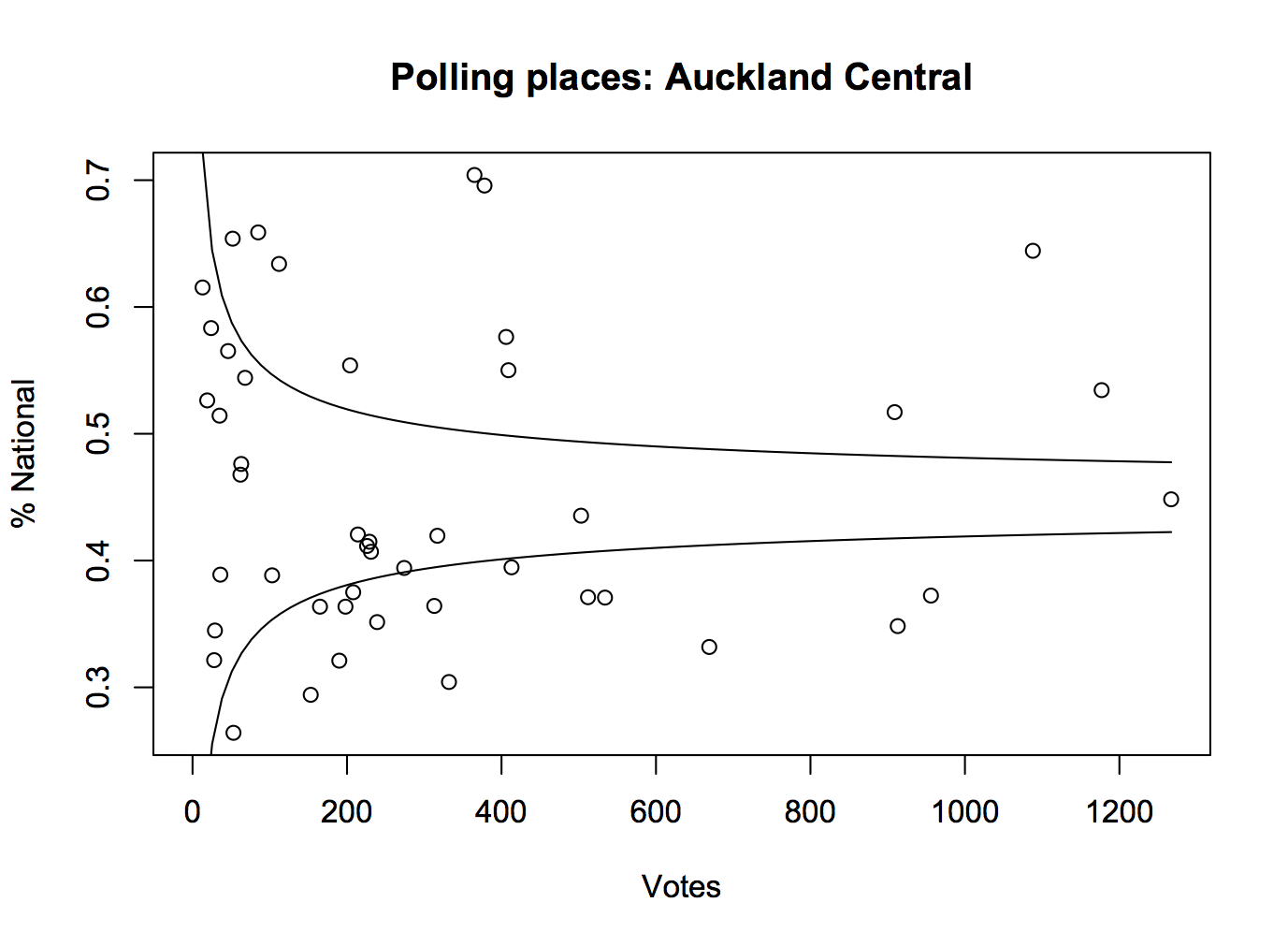

The survey size is plenty large enough if the survey was done right. You don't, as the saying goes, have to eat the whole egg to know that it's rotten. If you have a representative sample from a population, the size of the population is almost irrelevant to the accuracy of survey estimates from the sample: what matters is the size of the sample itself, as long as it's only a small fraction of the population. That's why opinion polls around the world tend to sample 1000-2000 people, even though that's 0.02-0.04% of the population of New Zealand, 0.004-0.009% of the population of Australia, or 0.0003-0.0006% of the population of the USA.
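To make the "population size is almost irrelevant" point concrete, here's a minimal Python sketch of the textbook 95% margin of error for a proportion from a simple random sample, including the finite population correction. The population figures are rough assumptions for illustration, and the calculation only applies to a genuinely random sample; it says nothing about bias.

```python
import math


def margin_of_error(n, population, p=0.5, z=1.96):
    """Approximate 95% margin of error for a proportion estimated from a
    simple random sample of size n drawn without replacement from a
    population of the given size. p=0.5 gives the worst case."""
    standard_error = math.sqrt(p * (1 - p) / n)
    # Finite population correction: essentially 1 whenever n is a tiny
    # fraction of the population, which is why population size barely matters.
    fpc = math.sqrt((population - n) / (population - 1))
    return z * standard_error * fpc


# Rough population figures, assumed for illustration only.
populations = {
    "New Zealand": 4_500_000,
    "Australia": 23_500_000,
    "USA": 320_000_000,
}

for country, size in populations.items():
    moe = margin_of_error(1000, size)
    print(f"{country}: n=1000 gives +/- {100 * moe:.1f} percentage points")
```

All three countries come out at about plus or minus 3.1 percentage points for a sample of 1000, even though the populations differ by a factor of about 70.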

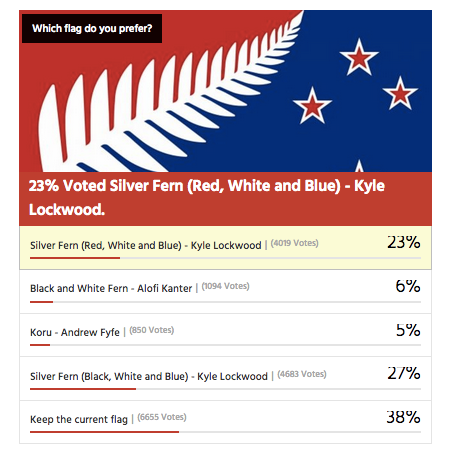

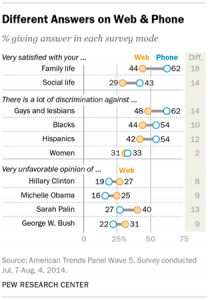

What's important is whether the survey is representative, which can be achieved either by selecting and weighting people to match the population, or by random sampling, or in practice by a mixture of the two. Unfortunately, the story doesn't tell us anything about how this survey was done.
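The weighting approach can be sketched with post-stratification, one common way of making a sample match the population on known characteristics. The respondents, age groups and population shares below are all made up for illustration; they aren't from the ConsumerLab survey.

```python
from collections import Counter

# Hypothetical respondents: (age group, answered yes to some question).
# Young people are over-represented relative to the assumed population.
sample = [
    ("15-34", True), ("15-34", True), ("15-34", False), ("15-34", True),
    ("15-34", True), ("15-34", True),
    ("35-54", True), ("35-54", False), ("35-54", True),
    ("55+", False),
]

# Hypothetical population shares for each age group (they sum to 1).
population_share = {"15-34": 0.35, "35-54": 0.35, "55+": 0.30}


def unweighted_estimate(sample):
    """Plain proportion of respondents answering yes."""
    return sum(answer for _, answer in sample) / len(sample)


def post_stratified_estimate(sample, population_share):
    """Give each respondent the weight population share / sample share for
    their group, then return the weighted proportion answering yes."""
    counts = Counter(group for group, _ in sample)
    n = len(sample)
    weights = {g: population_share[g] / (counts[g] / n) for g in counts}
    total = sum(weights[g] for g, _ in sample)
    yes = sum(weights[g] for g, answer in sample if answer)
    return yes / total


print(f"unweighted:      {unweighted_estimate(sample):.3f}")
print(f"post-stratified: {post_stratified_estimate(sample, population_share):.3f}")
```

Here the unweighted estimate is 0.700 and the weighted one is 0.525. Weighting only corrects imbalance on the variables you weight by; if the people who volunteer differ from everyone else within each group, no amount of weighting will fix that.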

Looking at the Ericsson ConsumerLab website, it doesn't look as though the survey is representative, or at least there aren't any details indicating that it is. That puts it in the same position as, say, the Global Drug Survey, which also has 100,000 participants, out of over 2 billion people worldwide who use alcohol, tobacco, and other drugs. Stuff and the SMH have reported on the Global Drug Survey at great length, and without the same scepticism.
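A minimal simulation of why a large sample doesn't rescue an unrepresentative one. The trait prevalence and the opt-in ratio are invented numbers, not anything known about the Ericsson or Global Drug Survey respondents; the point is only that self-selection bias doesn't shrink as the sample grows.

```python
import random

TRUE_PROPORTION = 0.3  # hypothetical share of the population with some trait
OPT_IN_RATIO = 3.0     # hypothetical: people with the trait are 3x as likely to volunteer


def random_sample_estimate(n, rng):
    """Estimate the proportion from a simple random sample of size n."""
    return sum(rng.random() < TRUE_PROPORTION for _ in range(n)) / n


def self_selected_estimate(n, rng):
    """Estimate the proportion from n volunteers, when having the trait
    makes people OPT_IN_RATIO times more likely to take part."""
    # Probability that a volunteer has the trait, by Bayes' rule.
    weighted_yes = TRUE_PROPORTION * OPT_IN_RATIO
    p_volunteer = weighted_yes / (weighted_yes + (1 - TRUE_PROPORTION))
    return sum(rng.random() < p_volunteer for _ in range(n)) / n


rng = random.Random(2015)
print(f"true value:               {TRUE_PROPORTION:.3f}")
print(f"random sample, n=1000:    {random_sample_estimate(1_000, rng):.3f}")
print(f"self-selected, n=100000:  {self_selected_estimate(100_000, rng):.3f}")
```

With these assumptions the 1000-person random sample lands close to 0.3, while the 100,000 volunteers settle tightly around 0.56: a very precise estimate of the wrong number.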